Understand and Unlock the Power of Data-Driven Testing

In software development, in-depth testing is key to producing quality applications. One approach that stands out for its scalability is Data-Driven Testing (DDT). This method is especially powerful in automated setups, where it allows multiple sets of inputs and their corresponding expected results to be tested efficiently.

This guide addresses the key questions around DDT: What is data-driven testing? How does it work? How can you implement it effectively?

What is data-driven testing?

Data-driven testing is a testing technique that separates the test logic from the test data. The script contains the logic, while the test data is stored in files or databases. This separation allows testers to execute multiple scenarios using a single script.

Common data sources used in data-driven testing include the following formats.

- CSV files: These are the easiest to use; the data is organized row-wise, making it simple to read and update.

- Databases: Adding and updating data sets means working with database tables. Stakeholders who lack technical knowledge of databases may need an additional GUI layer to make this interaction available to everyone.

- JSON files: Data is represented as key-value pairs in JSON files. Anyone reading or editing the file should have a basic understanding of the JSON format.

- XML files: XML files use custom tags, similar in appearance to HTML tags, to define the data structure. Users may need some training before working with these files.

You can build a robust testing setup by structuring data in one of these formats.
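
As a rough illustration of how these formats can feed the same script, the sketch below parses a CSV table and a JSON array into the same list of dictionaries. The column names (`username`, `password`, `expected`) and the sample values are assumptions made for this example, and the data is held in strings here only so the snippet runs without files on disk.

```python
import csv
import io
import json

# Hypothetical sample data; in practice these would be files on disk.
CSV_TEXT = """username,password,expected
alice,s3cret,Login successful
alice,wrong,Invalid username or password
"""

JSON_TEXT = """[
  {"username": "alice", "password": "s3cret", "expected": "Login successful"},
  {"username": "alice", "password": "wrong", "expected": "Invalid username or password"}
]"""


def load_csv_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def load_json_rows(text):
    """Parse a JSON array of objects into a list of dicts."""
    return json.loads(text)


csv_rows = load_csv_rows(CSV_TEXT)
json_rows = load_json_rows(JSON_TEXT)

# Both sources yield the same structure, so the test logic does not
# need to know which format the data came from.
print(csv_rows == json_rows)
```

Note that CSV values always arrive as strings, so a script that reads numbers from a CSV source would need to convert them itself.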

Why use data-driven testing?

In traditional test automation, test data is hardcoded into scripts. When multiple data sets have to be checked, this approach duplicates code for every new set. While suitable for a small number of cases, it becomes inefficient and hard to maintain as the input scenarios vary.

Since the data is maintained outside the code in DDT, it's easier to manage. Some advantages of DDT are:

- Reusability and time saving: One script can be used for multiple input data sets, thus reducing the coding and testing efforts.

- Improved coverage: Testers can keep adding a wide range of data sets to the data source. The inputs can include valid data sets, invalid data sets, boundary data sets, and empty data sets.

- Simplified maintenance: Updating a test case is as simple as modifying the data source. Non-technical team members can add or update test scenarios without changing the script itself.

- Regression friendly: Well suited to repetitive tests across versions and releases.

The simplicity of DDT varies depending on the data source chosen. Files are generally simpler to manage than databases. Documentation of the data set helps people use these data sources effectively.

Data-driven testing in practice: A real-life example - Login form

Let's say you are testing a login form on a website. The form accepts a username and a password, and has a submit button that must be clicked after filling out the form. Rather than writing separate tests, you can create a data table with these fields: username, password, expected output, and automated script output. Each test cycle pulls data from a row and submits the form. The output (success message or validation error) is compared with the expected output, and is then stored back in the 'automated script output' column.

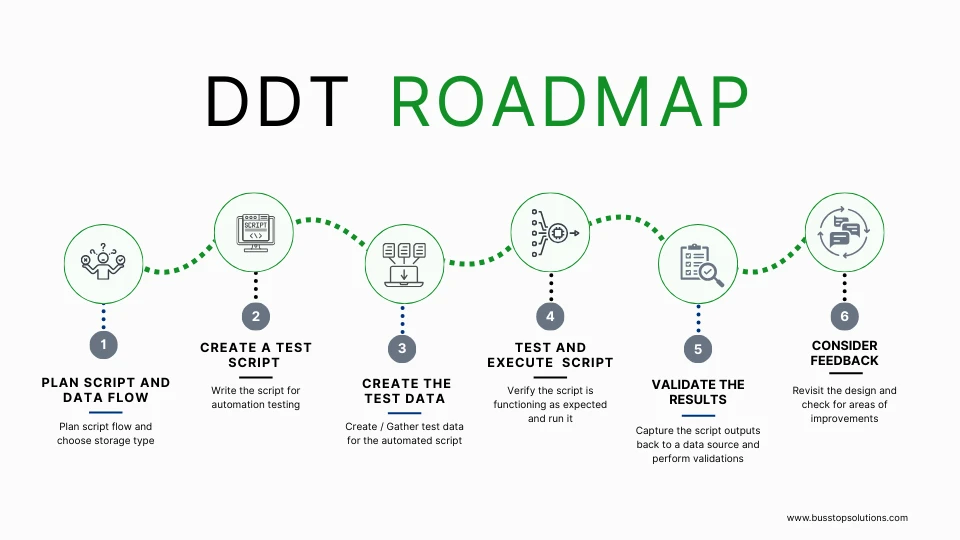
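
A minimal sketch of this loop is shown below. The `attempt_login` function is a hypothetical stand-in for the real form submission, and the credentials and messages in the table are invented for illustration; a real script would drive the browser or call the application instead.

```python
import csv
import io

# Hypothetical data table; the "actual" and "status" columns are filled in by the script.
TABLE = """username,password,expected
alice,s3cret,Login successful
alice,wrong,Invalid username or password
,s3cret,Username is required
"""


def attempt_login(username, password):
    """Stand-in for submitting the real login form (assumed behavior)."""
    if not username:
        return "Username is required"
    if (username, password) == ("alice", "s3cret"):
        return "Login successful"
    return "Invalid username or password"


rows = list(csv.DictReader(io.StringIO(TABLE)))
for row in rows:
    # One script, many scenarios: each row supplies the inputs and the expectation.
    row["actual"] = attempt_login(row["username"], row["password"])
    row["status"] = "PASS" if row["actual"] == row["expected"] else "FAIL"
    print(row["username"] or "<empty>", row["status"])
```

Adding a new scenario here means adding a row to the table; the loop itself stays unchanged.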

How can a data-driven approach be implemented?

Step 1: Plan the script and data flow: The questions below can help you decide.

- Where to store the data? In file format or a database setup? Which file format - CSV, JSON, XML?

- Should the data source hold only the input data, or also the expected output?

- How to store and report the script's output? Should the timestamp be recorded?

- How to prompt the users when the script fails to execute?

- Which assertions or checks will the script perform? For example, the system will check if the login was successful or not.

Step 2: Create the test script: Build a script that performs the desired testing action. Common examples are form submission, login verification, and adding items to a cart. The script should accept input values dynamically from the data source. It should also handle output and errors in the following ways:

- Record the result for every set of test data checked by the automated script

- Handle errors when unexpected behavior is encountered during script execution

- Perform basic assertions, if possible

- Generate a testing report after it completes a round of testing

Step 3: Create the test data: Add the test data to the external data source. Try to cover the basics, such as valid, invalid, boundary, and empty data sets. Additional columns, such as comments, tags, and expected output, provide a more complete picture.

Step 4: Test and execute the script: The script should read the data inputs from the data source and execute its logic. The script should run once for each set of data in the source.
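
One possible way to run the same logic once per data set is Python's standard `unittest` module with `subTest`, sketched below. The rows and the `attempt_login` stand-in are assumptions for this example; the rows are inlined only to keep the snippet self-contained, and would normally come from the external source.

```python
import unittest

# Hypothetical rows; in practice they would be loaded from the external source.
ROWS = [
    {"username": "alice", "password": "s3cret", "expected": "Login successful"},
    {"username": "alice", "password": "wrong", "expected": "Invalid username or password"},
    {"username": "", "password": "s3cret", "expected": "Username is required"},
]


def attempt_login(username, password):
    """Stand-in for the system under test (assumed behavior)."""
    if not username:
        return "Username is required"
    if (username, password) == ("alice", "s3cret"):
        return "Login successful"
    return "Invalid username or password"


class LoginDataDrivenTest(unittest.TestCase):
    def test_login_rows(self):
        for row in ROWS:
            # subTest reports each failing row separately and keeps going,
            # so one failing data set does not hide the others.
            with self.subTest(username=row["username"], password=row["password"]):
                actual = attempt_login(row["username"], row["password"])
                self.assertEqual(actual, row["expected"])


# Run the suite programmatically so the outcome is available as an object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginDataDrivenTest)
result = unittest.TextTestRunner(verbosity=1).run(suite)
```

Other test frameworks offer comparable parameterization features; `subTest` is used here only because it ships with the standard library.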

Step 5: Validate the results: The script captures the output and compares it with the expected result listed in the data set. This process helps test not only functionality but also edge cases and error handling. If automated validation isn't possible, capturing the system output is still recommended, because the captured output helps in the manual verification of results.
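
The validation step could look roughly like the sketch below. It assumes a hypothetical convention in which a row with an empty `expected` value is flagged for manual review rather than asserted, and it records a UTC timestamp with each result. The status labels and column names are illustrative choices, not a fixed format.

```python
import csv
import io
from datetime import datetime, timezone


def validate(row, actual):
    """Build a result record; rows without an expected value need manual review."""
    expected = row.get("expected", "")
    if not expected:
        status = "MANUAL_REVIEW"
    elif actual == expected:
        status = "PASS"
    else:
        status = "FAIL"
    return {
        **row,
        "actual": actual,  # always captured, even when no check is possible
        "status": status,
        "checked_at": datetime.now(timezone.utc).isoformat(),
    }


def write_report(results, stream):
    """Write result records as CSV, using the first record's keys as the header."""
    writer = csv.DictWriter(stream, fieldnames=list(results[0].keys()))
    writer.writeheader()
    writer.writerows(results)


# Hypothetical outputs, as if returned by the application for three rows.
results = [
    validate({"username": "alice", "expected": "Login successful"}, "Login successful"),
    validate({"username": "bob", "expected": "Login successful"}, "Account locked"),
    validate({"username": "carol", "expected": ""}, "Password expired"),
]

report = io.StringIO()  # a real script would open a file instead
write_report(results, report)
print(report.getvalue())
```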

Best Practices for Effective Data-Driven Testing

To get the best results from data-driven testing, follow these recommendations:

1. Keep data organized

- Keep test data simple and readable.

- Use well-structured files and consistent naming.

- Create data sets that are independent of each other.

For example, suppose you want to test whether a user is blocked after three login failures. The script should take one row of invalid data and execute the login process three times automatically. Testers should not write three separate rows of invalid data to check this functionality. Such rows depend on each other and on their order, so they would have to be tracked manually from then on.
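
As a sketch of this idea, each row below carries a hypothetical `attempts` column, and the script repeats the login that many times against a fresh service instance. `FakeLoginService` is an in-memory stand-in with an assumed lockout rule (locked on the third failure); it is not a real API.

```python
class FakeLoginService:
    """In-memory stand-in for the system under test (assumed rule: lock on 3rd failure)."""

    MAX_FAILURES = 3

    def __init__(self, credentials):
        self.credentials = credentials
        self.failures = {}

    def login(self, username, password):
        if self.failures.get(username, 0) >= self.MAX_FAILURES:
            return "Account locked"
        if self.credentials.get(username) == password:
            self.failures[username] = 0
            return "Login successful"
        self.failures[username] = self.failures.get(username, 0) + 1
        if self.failures[username] >= self.MAX_FAILURES:
            return "Account locked"
        return "Invalid username or password"


# One self-contained row per scenario; no row relies on another row having run first.
ROWS = [
    {"username": "alice", "password": "wrong", "attempts": "3", "expected": "Account locked"},
    {"username": "bob", "password": "wrong", "attempts": "2", "expected": "Invalid username or password"},
]


def run_row(row):
    """Repeat the login as many times as the row asks and return the last response."""
    service = FakeLoginService({"alice": "s3cret", "bob": "hunter2"})  # fresh state per row
    response = None
    for _ in range(int(row["attempts"])):
        response = service.login(row["username"], row["password"])
    return response


outcomes = [run_row(row) == row["expected"] for row in ROWS]
print(outcomes)
```

Because every row starts from a fresh state, rows can be reordered, removed, or run alone without changing the outcome.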

2. Separate Logic and Data

Ensure that your scripts do not include hardcoded values. All inputs and expected results should come from external files. Do a code review with a peer to ensure no data is hardcoded.

3. Use Positive and Negative Test Cases

Add invalid or boundary condition data to check system responses with different kinds of input. You can tag the test data in a comments section in the data source.
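
If the tags are stored in a dedicated column, a short check like the one below can show which categories the data source still lacks. The `tag` column and the four category names are assumptions for this sketch.

```python
from collections import Counter

# Categories this sketch assumes every data source should cover.
REQUIRED_TAGS = {"positive", "negative", "boundary", "empty"}

# Hypothetical rows with a "tag" column describing the intent of each data set.
rows = [
    {"username": "alice", "password": "s3cret", "tag": "positive"},
    {"username": "alice", "password": "wrong", "tag": "negative"},
    {"username": "a" * 64, "password": "s3cret", "tag": "boundary"},
    {"username": "mallory", "password": "s3cret", "tag": "negative"},
]

coverage = Counter(row["tag"] for row in rows)  # rows per category
missing = REQUIRED_TAGS - set(coverage)         # categories with no rows at all

print(dict(coverage))
print(sorted(missing))
```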

4. Avoid Manual Triggers

Wherever possible, automate the execution of test scripts via CI/CD pipelines, or schedule specific scripts according to the project's needs. Automated and timed runs reduce human error and speed up feedback.

Common Challenges in DDT

Data challenges:

- Ensuring test data stays in sync with application logic can be tricky. Here, assertions through scripts can help to identify which data sets need an update.

- Storing sensitive data (such as passwords) in plain files can introduce security risks.

- Data creation in some domains involves a larger and more complex process. It might not be as straightforward as a basic login form.
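
One way to reduce the sensitive-data risk mentioned above is to keep only a reference in the data file and resolve the real value at run time. The sketch below assumes a hypothetical `env:NAME` convention that points to an environment variable; the prefix and the variable name are invented for this example.

```python
import os


def resolve_secret(value, environ=os.environ):
    """Replace 'env:NAME' with the value of environment variable NAME."""
    prefix = "env:"
    if value.startswith(prefix):
        name = value[len(prefix):]
        if name not in environ:
            # Fail loudly instead of silently testing with an empty password.
            raise KeyError(f"environment variable {name} is not set")
        return environ[name]
    return value


# The data file stores a reference, not the password itself.
row = {"username": "alice", "password": "env:TEST_ALICE_PASSWORD"}

# Stand-in for os.environ so the demo runs anywhere; a CI system would set the real variable.
fake_env = {"TEST_ALICE_PASSWORD": "s3cret"}

resolved = {key: resolve_secret(val, fake_env) for key, val in row.items()}
print(resolved["username"], "password resolved:", resolved["password"] != row["password"])
```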

Result management:

- When running multiple iterations with different data points, a need for reporting arises. An ideal report would contain the test data along with its outputs and checks performed.

- Additional human checks are required to confirm whether the tests ran as expected.

Programming challenges:

- Testers need scripting experience, which may be outside their area of expertise.

- Building the framework and data structure takes time initially.

- Sometimes, one bad data row can break the loop. Scripts should be robust enough to handle such errors.

- While maintenance is easier, it still requires ongoing attention, especially when UI or APIs change.
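
To illustrate the point about a bad data row breaking the loop, the sketch below wraps each row in its own `try`/`except` so that a malformed value is recorded as an error and the remaining rows still run. The table, the `attempts` column, and the status labels are assumptions for this example.

```python
import csv
import io

# Hypothetical table; the second data row has a malformed "attempts" value.
TABLE = """username,password,attempts
alice,wrong,3
bob,wrong,three
carol,wrong,1
"""


def check_row(row):
    """Stand-in for the real test step; raises ValueError on malformed data."""
    attempts = int(row["attempts"])
    return "OK" if attempts > 0 else "SKIPPED"


results = []
# start=2 because line 1 of the file is the header row.
for line_no, row in enumerate(csv.DictReader(io.StringIO(TABLE)), start=2):
    try:
        status = check_row(row)
    except Exception as exc:  # broad on purpose: any row-level failure is recorded, not fatal
        status = f"ERROR: {type(exc).__name__}"
    results.append((line_no, row["username"], status))

for entry in results:
    print(entry)
```

Recording the line number alongside the error makes it easier to find and fix the offending row in the data source.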